Shadow AI refers to employees using AI tools without the approval of their company's leadership. It usually isn't done with bad intentions; more often, teams and individuals are simply trying to get their work done faster and stay ahead of their deadlines.

The issue is that teams are using these AI tools outside any compliance review by the organization. This is either because the organization hasn’t built a clear approach for integrating AI into daily work or because the risks of shadow AI just aren’t being prioritized.

Risks of Shadow AI

The dangers of shadow AI aren't always immediate. They tend to surface slowly, through data slipping into the wrong hands and compliance issues that come at a high cost.

Here is a breakdown of two major risks that shadow AI poses to businesses.

Operational Risk

A well-known case that highlighted the operational risks of shadow AI comes from Samsung. Some of their engineers started using ChatGPT to help with day-to-day tasks, like debugging code and summarizing internal documents. In the process, they accidentally shared sensitive source code and meeting notes without any approval or checks in place.

As a result, Samsung banned external AI tools across teams and started working on their own in-house alternative with better control.

Legal and Compliance-Related Risks

Issues around AI copyright and ownership complicate how data and IP are handled when teams use external tools. Without organization-wide policies in place, the legal impact of shadow AI can escalate very quickly.

For instance, consider this scenario:

- Employees may unknowingly input private client data, financials, or intellectual property into public AI tools.

- These tools often store, process, or learn from that data, putting the company at risk of violating data protection laws.

Here’s where it gets serious:

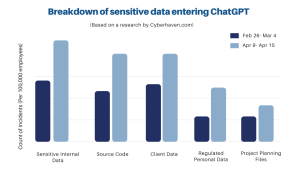

- A report by Cyberhaven found that 11% of the data employees paste into ChatGPT is confidential, including internal business details and client information.

- Non-compliance with data protection laws can be expensive. Under the GDPR, companies face fines of up to 4% of their worldwide annual revenue or €20 million, whichever is higher. In the U.S., the CCPA imposes penalties of up to $7,500 per intentional violation, with no cap on total fines.
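To make the scale of these penalties concrete, here is a small arithmetic sketch. It assumes the higher GDPR tier from Article 83(5) (up to 4% of worldwide annual turnover or €20 million, whichever is higher) and the $7,500-per-intentional-violation CCPA figure cited above. The function names are illustrative, and statutory amounts can be adjusted over time, so treat the results as rough upper bounds rather than likely fines.

```python
# 4% and $7,500 as cited above; the EUR 20M alternative comes from GDPR Article 83(5).
GDPR_TURNOVER_PERCENT = 4
GDPR_FIXED_AMOUNT_EUR = 20_000_000
CCPA_PER_INTENTIONAL_VIOLATION_USD = 7_500


def gdpr_max_fine_eur(annual_turnover_eur: int) -> int:
    """Upper bound of the higher GDPR tier: 4% of turnover or EUR 20M, whichever is higher."""
    return max(annual_turnover_eur * GDPR_TURNOVER_PERCENT // 100, GDPR_FIXED_AMOUNT_EUR)


def ccpa_max_penalty_usd(intentional_violations: int) -> int:
    """CCPA penalties accrue per violation, with no aggregate cap."""
    return intentional_violations * CCPA_PER_INTENTIONAL_VIOLATION_USD


print(gdpr_max_fine_eur(2_000_000))      # 20000000: the fixed amount dominates
print(gdpr_max_fine_eur(1_000_000_000))  # 40000000: 4% of turnover dominates
print(ccpa_max_penalty_usd(1_000))       # 7500000: 1,000 intentional violations
```

For a startup with €2 million in turnover, 4% is only €80,000, so the €20 million figure sets the ceiling; the percentage only takes over above €500 million in turnover.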

Most startups haven't set clear boundaries or systems around AI use yet. That's understandable, but ignoring the problem won't make the risk go away. Later in this article, we'll discuss how startups can tackle shadow AI.

Startups vs. Enterprises: Which Are More Vulnerable to Shadow AI?

At first glance, large enterprises might seem more exposed to shadow AI. But early-stage startups are often more vulnerable, not because they use more AI but because they lack the structure to manage it.

Here's why:

- AI adoption happens earlier than policy: In fast-moving teams, tools like ChatGPT, Claude, or Midjourney often become part of the workflow before leadership even realizes it. By the time founders are thinking about policy, the behavior is already embedded.

- Every mistake is magnified: Unlike large enterprises with in-house legal and PR teams, startups feel the consequences immediately. A single mistake, like leaking pitch decks or customer data, can derail funding or spark legal trouble that founders aren't prepared for.

We've covered what shadow AI is and the risks it poses to businesses. Next, let's look at a few real-world examples of what can go wrong, before turning to practical ways to tackle it.

Real-World Examples of Shadow AI Consequences

- Developer Integrated an Unapproved AI Translation Tool

A developer quietly integrated an AI translation tool into a customer portal without a security review. The tool had known vulnerabilities that attackers later exploited. The result: customer conversations leaked, service was disrupted, and the company took a financial hit that even minimal oversight could have prevented.

- Employees Feeding Sensitive Data into Chatbots

Cyberhaven's report found that employees at tech companies regularly use tools like ChatGPT and Gemini through personal accounts, bypassing IT controls. Of the data shared, customer support logs made up over 16%, source code around 13%, and R&D material close to 11%. All of it went into public AI models with no oversight or audit trail.

- Customer Service Agents Using Unauthorized Generative AI Tools

Zendesk found that nearly half of customer support agents use tools like Copilot or ChatGPT without company approval. They're trying to move faster, but without proper vetting, that speed comes at the cost of data control and compliance visibility.

A Practical Solution to Tackle Shadow AI

You can't stop people from using AI tools, but you can build a system around them. The goal isn't to block innovation; it's to keep things safe, trackable, and aligned with company goals.

Here's a simple, workable approach to tackling shadow AI:

1. Start with a survey

Create a short internal form to understand how far shadow AI has spread in your workplace. Consider asking questions like these:

- What AI tools have you used in the last 30 days?

- What is the purpose of using a particular tool?

- Did you input any internal data?

Assure your team members that responses will be kept anonymous. According to Microsoft's 2024 Work Trend Index, 52% of people who use AI at work are reluctant to admit using it for their most important tasks.

So, make it clear to your team that the goal is not to police AI use but to get a real picture of what’s happening so that better systems can be built around it.
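Once responses come in, reporting them only in aggregate helps keep the promise of anonymity. The sketch below assumes a hypothetical response format (a list of tools, a purpose, and a yes/no answer on internal data) with no names or emails attached; adapt the field names to whatever your form tool exports.

```python
from collections import Counter

# Hypothetical anonymized responses: no names or emails, only the answers.
responses = [
    {"tools": ["ChatGPT", "Claude"], "purpose": "drafting emails", "internal_data": True},
    {"tools": ["ChatGPT"], "purpose": "debugging code", "internal_data": True},
    {"tools": ["Midjourney"], "purpose": "design mockups", "internal_data": False},
    {"tools": [], "purpose": "", "internal_data": False},
]


def summarize(responses: list[dict]) -> dict:
    """Aggregate survey answers so that no individual response is reported."""
    ai_users = [r for r in responses if r["tools"]]
    return {
        "respondents": len(responses),
        "ai_users": len(ai_users),
        # True counts as 1, so this is the number of AI users who shared internal data.
        "shared_internal_data": sum(r["internal_data"] for r in ai_users),
        "tools": Counter(t for r in ai_users for t in r["tools"]).most_common(),
        "purposes": Counter(r["purpose"] for r in ai_users).most_common(),
    }


print(summarize(responses))
```

Sharing only totals like these with the team reinforces that the survey is about the big picture, not about individuals.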

2. Set up browser-level visibility with employee consent

Use tools like Dope Security, Netskope, or Cyberhaven to get visibility into how AI tools are being used across your team. These tools can detect when someone is pasting data into public AI models like ChatGPT.

Consider these simple actions:

- Begin with monitoring:

Let the tool run silently in the background, with no alerts and no restrictions. Just gather data on which AI apps are being used and what kind of internal content is being pasted into them. This gives you a clear picture without alarming your team.

- Move to flagging risky patterns:

Once you see the usage trends, set up custom alerts for specific behaviors, such as copying data from CRM tools or internal dashboards into ChatGPT. The tool will flag this activity so you can follow up if needed.

- Avoid hard blocks (unless there's a serious breach):

Don't restrict access right away. If you block tools too early, people will switch to personal devices where you have no visibility. Reserve hard blocks for repeat violations or critical data types like unreleased financials.
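Each monitoring product configures alert rules in its own console, so the exact setup depends on the tool. As a tool-agnostic illustration of the "flag, don't block" stage, the sketch below assumes a hypothetical, simplified paste-event export and flags events where content moves from a sensitive internal source into an AI domain. The event shape and both domain lists are placeholders.

```python
from dataclasses import dataclass


@dataclass
class PasteEvent:
    """Hypothetical, simplified event; real tools use their own export schemas."""
    user: str
    source_domain: str       # where the content was copied from
    destination_domain: str  # where it was pasted


# Placeholder lists; replace with your own AI domains and internal systems.
AI_DOMAINS = ("chatgpt.com", "chat.openai.com", "claude.ai", "gemini.google.com")
SENSITIVE_SOURCES = ("crm.example.com", "dashboard.example.com")


def matches(domain: str, watchlist: tuple[str, ...]) -> bool:
    """True if the domain equals a watchlist entry or is a subdomain of one."""
    return any(domain == d or domain.endswith("." + d) for d in watchlist)


def flag_risky(events: list[PasteEvent]) -> list[PasteEvent]:
    """Return events where content moved from a sensitive source into an AI tool.

    Nothing is blocked here; flagged events are only surfaced for follow-up.
    """
    return [
        e for e in events
        if matches(e.source_domain, SENSITIVE_SOURCES)
        and matches(e.destination_domain, AI_DOMAINS)
    ]


events = [
    PasteEvent("user-1", "crm.example.com", "chatgpt.com"),
    PasteEvent("user-2", "docs.example.com", "claude.ai"),
    PasteEvent("user-3", "dashboard.example.com", "notion.so"),
]

for e in flag_risky(events):
    print(f"FLAG: {e.user} pasted from {e.source_domain} into {e.destination_domain}")
```

Only the first event is flagged: the second pastes non-sensitive content, and the third sends sensitive content to a non-AI destination.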

3. Define and communicate what can’t be shared

Instead of a vague “don't share sensitive data” rule, give concrete examples such as:

- Client names and emails

- Unreleased product screenshots

- Pitch decks or investor updates

- Legal documents or contracts

Put this list in a simple internal document (Notion or Google Docs works fine) and link it in onboarding materials, Slack, and whatever project management tool you use.
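The same list can double as a lightweight self-check. The sketch below turns the examples above into hypothetical pattern rules that a person, or an internal tool, could run on text before pasting it into an AI assistant. Regular expressions only catch obvious cases such as email addresses and keywords, so client names are matched against an assumed, company-maintained list; the check complements the monitoring from step 2 rather than replacing it.

```python
import re

# Hypothetical rules mirroring the "can't share" list; tune them to your own data.
PATTERNS = {
    "email address": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "investor material": re.compile(r"\b(?:pitch deck|investor update|term sheet)\b", re.IGNORECASE),
    "legal document": re.compile(r"\b(?:contract|NDA|non-disclosure agreement)\b", re.IGNORECASE),
    "unreleased material": re.compile(r"\b(?:unreleased|confidential|internal only)\b", re.IGNORECASE),
}

# Hypothetical client list; regexes alone can't recognize client names.
CLIENT_NAMES = ("Acme Corp", "Globex")


def check_before_sharing(text: str) -> list[str]:
    """Return the categories the text appears to contain; an empty list means nothing was flagged."""
    hits = [name for name, pattern in PATTERNS.items() if pattern.search(text)]
    lowered = text.lower()
    if any(client.lower() in lowered for client in CLIENT_NAMES):
        hits.append("client name")
    return hits


print(check_before_sharing("Summarize this investor update for jane@acme.io"))
print(check_before_sharing("Draft a follow-up email to Globex about the contract"))
print(check_before_sharing("Explain Python list comprehensions"))
```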

4. Offer internal AI alternatives wherever possible

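Use a business plan such as ChatGPT Team or Claude Team, with company-controlled access, and put it behind SSO where your plan supports it. This gives you admin control over who has access and how conversation data is retained, which reduces exposure.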

For internal Q&A, use tools like:

- Glean or Dashworks (for private AI search)

- Custom RAG-based bots using your own knowledge base (built with tools like LangChain or LlamaIndex)

This approach gives people a safe place to experiment without turning to public tools and putting the organization's sensitive information at risk.
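A RAG bot answers questions by first retrieving relevant passages from your own documents and then passing them to a model as context. The sketch below shows only the retrieval and prompt-assembly half, on a hypothetical three-document knowledge base. It swaps in simple bag-of-words cosine similarity so it runs without dependencies; a real build with LangChain or LlamaIndex would typically use embeddings and a vector store, then send the assembled prompt to your company-controlled model.

```python
import math
import re
from collections import Counter

# Hypothetical internal knowledge base; in practice, your wiki or docs.
DOCS = {
    "expenses.md": "Submit expense reports in the finance portal within 30 days.",
    "ai-policy.md": "Approved AI tools are the company chat workspace and the internal search bot. Never paste client data.",
    "onboarding.md": "New hires get a laptop, an SSO account, and Slack access on day one.",
}


def tokenize(text: str) -> Counter:
    """Bag-of-words term counts (a stand-in for real embeddings)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, k: int = 1) -> list[str]:
    """Return the names of the k documents most similar to the question."""
    q = tokenize(question)
    return sorted(DOCS, key=lambda name: cosine(q, tokenize(DOCS[name])), reverse=True)[:k]


def build_prompt(question: str, k: int = 1) -> str:
    """Assemble retrieved passages into a grounded prompt for the model."""
    context = "\n".join(f"[{name}] {DOCS[name]}" for name in retrieve(question, k))
    return f"Answer using only the context below.\n{context}\n\nQuestion: {question}"


print(build_prompt("Which AI tools are approved?"))
```

Because answers are grounded in your own documents and the model runs under company control, nothing in this loop needs to touch a public tool.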

5. Review access monthly

Set up a lightweight AI usage audit that repeats every month. Here's one way to implement it:

- Export browser logs from your endpoint security tool (like Dope Security or Cyberhaven) and filter for AI domains. Sort by frequency and user.

- Update an internal log to document new AI tools spotted, who’s using them, and whether internal data was involved.

- Mark each one for either approval, sandbox testing, or deprecation. If a tool involves sensitive workflows, loop in legal or security before making the call.
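The log-review steps can be scripted. The sketch below assumes a hypothetical CSV export with `user`, `domain`, and `timestamp` columns (real exports from Dope Security, Cyberhaven, and similar tools use their own schemas) and an assumed watchlist of AI domains. It filters for AI traffic, counts visits per domain and per user, and seeds a review log in which each tool stays "pending" until it is approved, sandboxed, or deprecated.

```python
import csv
import io
from collections import Counter

# Hypothetical export; column names vary by tool, so adjust them to yours.
SAMPLE_EXPORT = """user,domain,timestamp
alice,chatgpt.com,2024-05-02T10:15:00
bob,claude.ai,2024-05-03T09:01:00
alice,chatgpt.com,2024-05-07T14:22:00
carol,github.com,2024-05-08T11:40:00
bob,perplexity.ai,2024-05-09T16:05:00
"""

# Hypothetical watchlist; extend it as new tools show up in the logs.
AI_DOMAINS = ("chatgpt.com", "chat.openai.com", "claude.ai", "gemini.google.com", "perplexity.ai")


def is_ai_domain(domain: str) -> bool:
    """True for a watchlisted domain or any of its subdomains."""
    return any(domain == d or domain.endswith("." + d) for d in AI_DOMAINS)


def audit(csv_file):
    """Count AI-domain visits per domain and per (user, domain), most frequent first."""
    rows = [r for r in csv.DictReader(csv_file) if is_ai_domain(r["domain"])]
    by_domain = Counter(r["domain"] for r in rows)
    by_user = Counter((r["user"], r["domain"]) for r in rows)
    return by_domain.most_common(), by_user.most_common()


domains, users = audit(io.StringIO(SAMPLE_EXPORT))
print("Visits per AI domain:", domains)
print("Visits per user and domain:", users)

# Every spotted tool starts as pending; reviewers later set approve, sandbox, or deprecate.
review_log = {domain: "pending" for domain, _ in domains}
print(review_log)
```

To run it on a real export, open the file with `open("export.csv", newline="")` (a hypothetical filename) and pass the file object to `audit`.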

Once decisions are made, share an internal summary of what's now approved, what's restricted, and why. This keeps everyone informed, builds trust, and goes a long way toward reducing the risks of shadow AI.

Final Thoughts

Shadow AI is already part of most workflows; it just hasn't been acknowledged yet.

The real risk isn’t just leaks or fines. It’s losing track of how work gets done. And when that happens, even small missteps can lead to expensive problems.

Tackle shadow AI by first acknowledging it, then offering safer alternatives that get the work done just as conveniently. Most importantly, review your approach regularly so the risks stay visible before they turn into real damage.